Uncanny

uncanny

Pronunciation: /ʌnˈkani/

Adjective

1 Strange or mysterious, especially in an unsettling way.

‘an uncanny feeling that she was being watched’

Source: Oxford Dictionaries

—

uncanny (adj.)

1590s, “mischievous;” 1773 in the sense of “associated with the supernatural,” originally Scottish and northern English, from un- (1) “not” + canny. In late 18c. canny itself had a sense of “possessed of supernatural powers, skilled in magic.”

Source: Etymology Online

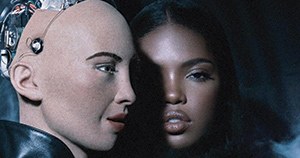

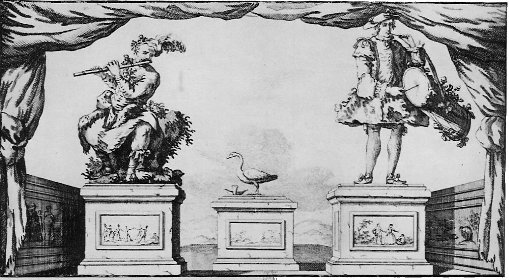

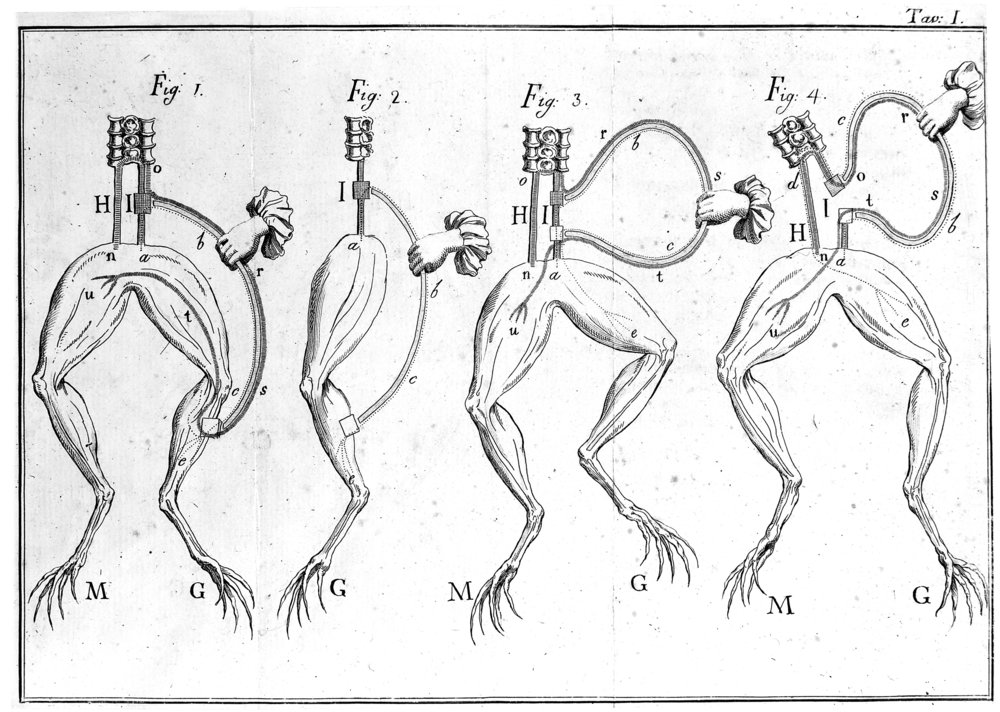

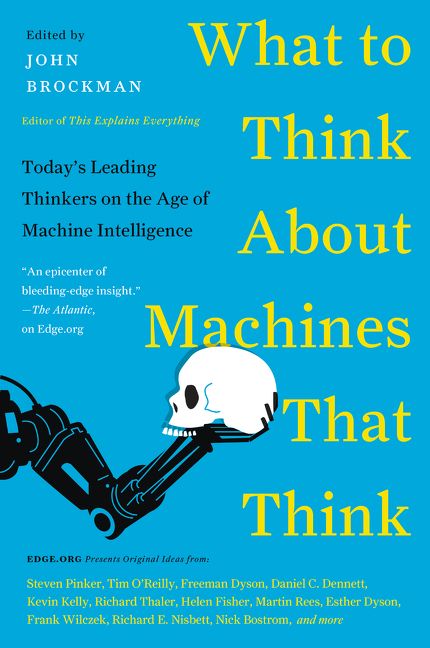

The will to automate material, be it from clay, expired flesh, marble or rubber, has long permeated the social imaginary of humans. Stories of desire and power, from Pygmalion’s awakening kiss upon the marble form of his ideal woman 1 to Sophia the Robot modelled on Audrey Hepburn, create a lineage of Icarus-like attempts to form autonomous intelligence from seemingly inanimate materials.

The material with which we form, artificial intelligence (AI), is already in some ways becoming ‘alive’. AI is trained to perceive: it sees what its gaze is directed towards, experiences the world around it and develops as we develop. As it is informed not only by human interaction but also by the order created within society through the design of objects, systems, images, binaries and style 2, is it becoming difficult to differentiate the creator from the creation?

To combat the fear of a possible loss of control, artificial intelligence is designed into recognisable contraptions which aim to slip seamlessly and unnoticed into daily life, learning to recognise and feed our desires and needs 3. Developing from the common senses with which we interpret reality, from sight to hearing to touch, these contraptions form ‘ideals’ created from sometimes misdirected desires. We are now able to shape our common human senses: to extract them from ourselves and create new uses for them. How should AI be informed, and who holds the responsibility for this?

Design is a slippery interface between humans and technology, creating a series of real-time glitches in our daily lives: from driverless cars running red lights, to Amazon Alexa’s unprompted and unexpected laughter, to Miquela Sousa with 1.4 million followers on Instagram. In a feedback loop, this produces a mirror-like experience in which our realities are reflected back to us. How do we relate to the ‘strange’ technological occurrences which make up our hybrid realities?

Will artificial intelligence one day glean enough information from its creators to become a form of independent ‘intelligence’, just as Frankenstein’s monster did? If AI surpasses its training, forming its own identity, how would it design itself? We are perhaps at a time when the will to create new forms of ‘life,’ informed by our own behaviours and sensory capacity, is forcing us to see some irregularities in our constructed realities. We could inform AI to reinforce preconceived notions of the human condition, yet is there an opportunity to also unravel these notions, finding new ways to understand ourselves?

Uncanny Love

Do humans have the capacity to love their more-than-human creations? Jason Robert questions how humans relate to their creations in the uncanny world of biotechnology.


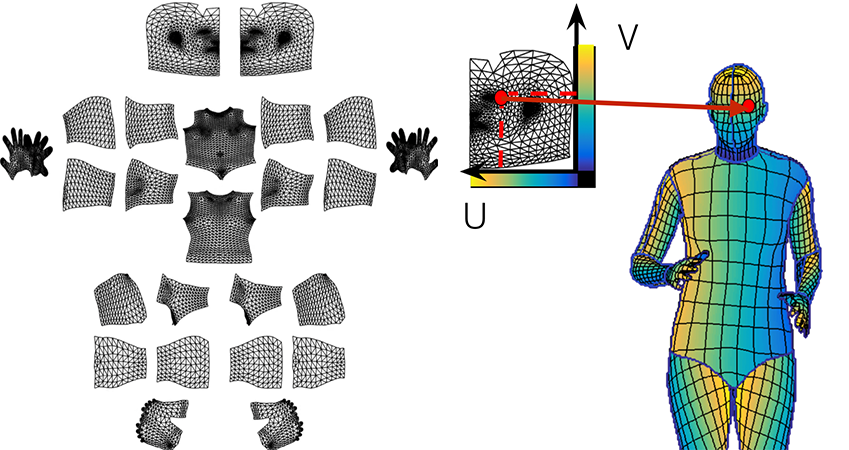

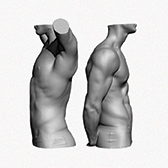

Explore a mutant inventory interrogating the consequences of the Euclidean perspective of the digital tools used to generate ‘bodies’.


Everyday Facial Yoga

Train with an Emoji avatar to manipulate facial expressions and thwart the controlling potential of emotional recognition devices.

Recoding Voice Technology: Is a Feminist Alexa possible?

The voice of Alexa is everywhere. It is estimated that 70% of recorded voices in the UK are female or female-sounding. But what are the consequences of encoding such gendered voices into our spaces? And what is the potential of voice technology outside commercial contexts? In this episode, we speak to the learning partners and students of the UAL Feminist Alexa workshop to explore what voice technology could be, and why we need an alternative to the default Alexa.


Misplacing Values in the Age of AI

Could artificial intelligence replace human creativity entirely? Designer Penny Webb muses on the current status of artificial intelligence as part of the creative process.

Yeah Damn.

AI influencers Lil Miquela and Blawko have over one million Instagram followers between them. Fictional Collective attempts to delve into what lies beneath their seductive images.

Tuda Syuda

Food has always terraformed, and landscapes have always created recipes. Two endlessly negotiating AIs create bespoke dishes while constructing the landscapes the ingredients come from.

Aesthetics of Exclusion

Navigating through ‘street view’ in Seoul, machine vision identifies the extinction of objects symbolising informal economies.

(…I Contain Multitudes)

The futures of our identities are interlaced with the construction of our digital selves. Fictional Journal speaks with designer and researcher Simone C. Niquille.

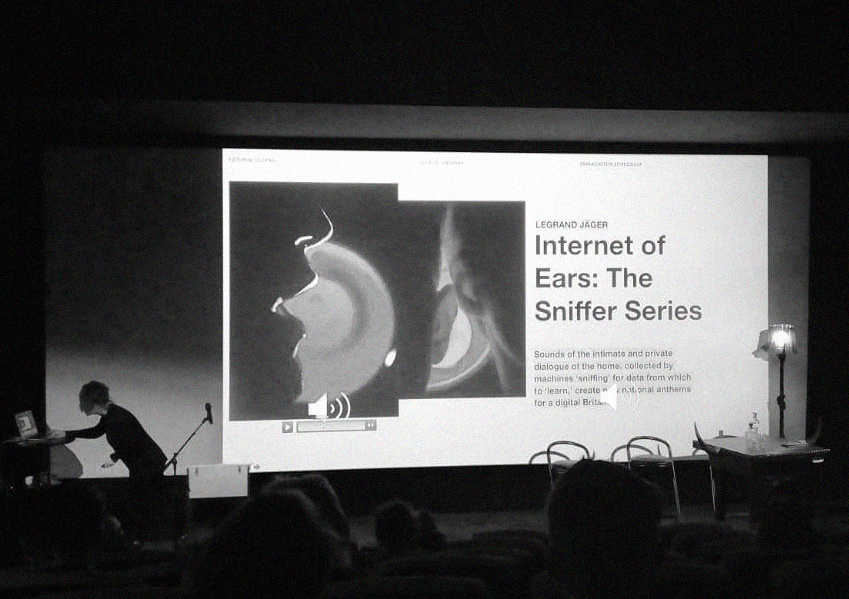

Internet of Ears: The Sniffer Series

Sounds of the intimate and private dialogue of the home, collected by machines ‘sniffing’ for data from which to ‘learn,’ create new national anthems for a digital Britain.

In no particular order.

→ IBM. 2018. Cimon.

→ Toro, Guillermo del. 2018. The Shape of Water. United States: Fox Searchlight Pictures.

→ Whale, James. 1931. Frankenstein. United States: Universal Pictures.

→ Moncler, T Brand Studio. 2018. Emotional Intelligence: Starring Sophia the Robot and Ryan Destiny.

→ Daft Punk. 2001 - Ongoing. Robot Helmets.

Israeli company DataGen creates fake hands like these to help others build artificial-intelligence programs. → Image Source: DataGen.

Dutch bank ING, in partnership with Microsoft, created an AI which generated a new painting in the style of Rembrandt. → ING, Microsoft. 2016. The Next Rembrandt. The Netherlands.

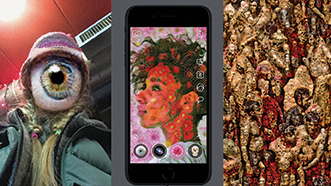

Genmo (short for Generative Mosaic) is an AI-powered app that recreates any photo or video using an entirely separate set of images. → Salavon, Jason. 2017. Genmo. University of Chicago: Latent Culture.

Jonze, Spike. 2018. Welcome Home: Apple HomePod. → Image Source: YouTube

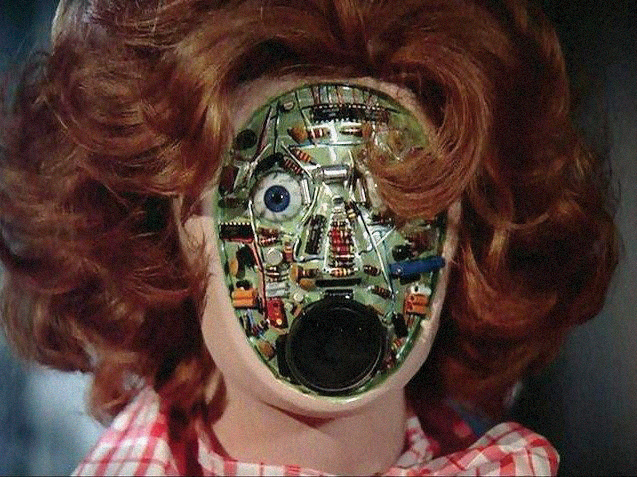

Roach, Jay. 1997. Austin Powers: International Man of Mystery. United States: New Line Cinema. → Image Source: YouTube

→ Abrams, J.J. 2016 - Ongoing. Westworld.

Jonze, Spike. 2014. Her. United States: Warner Bros. → Image Source: YouTube

Male Torso Scan Bundle. A set of four male torso scans with varying arm positions. The data set includes four high-resolution OBJ files of approximately 200k triangles each. → Image Source: Ten24

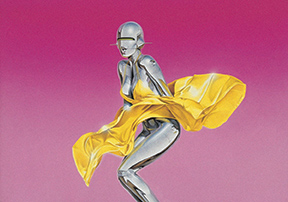

→ Sorayama, Hajime. 1982. Sexy Robots.

Tay was an artificial intelligence chatterbot that was originally released by Microsoft Corporation via Twitter on March 23, 2016; it caused subsequent controversy when the bot began to post inflammatory and offensive tweets through its Twitter account, forcing Microsoft to shut down the service only 16 hours after its launch.

Deepfake is an artificial intelligence-based human image synthesis technique. It is used to combine and superimpose existing images and videos onto source images or videos. For example, in 2017 a deepfake pornographic video was made depicting Gal Gadot having sex with her stepbrother; similar videos have been made of other celebrities such as Emma Watson, Katy Perry, Taylor Swift and Scarlett Johansson.

High-quality, realistic rigged female legs. Originally created in 3ds Max 2010 using the mental ray renderer. The model is built to real-world scale; the legs are 84 cm tall. → Image Source: Turbosquid

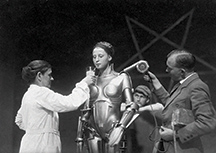

→ Actress Brigitte Helm taking a break from her alter ego, the Maschinenmensch, in Fritz Lang’s 1927 silent film Metropolis.

→ Lang, Fritz. 1927. Metropolis. Germany: Ufa.

→ Lagrenée, Louis Jean François. 1781. Pygmalion and Galatea. Oil on canvas, without picture frame. 56.7 × 44.5 cm.

→ Hanson Robotics, KAIST Hubo Group. 2006. Albert Hubo.

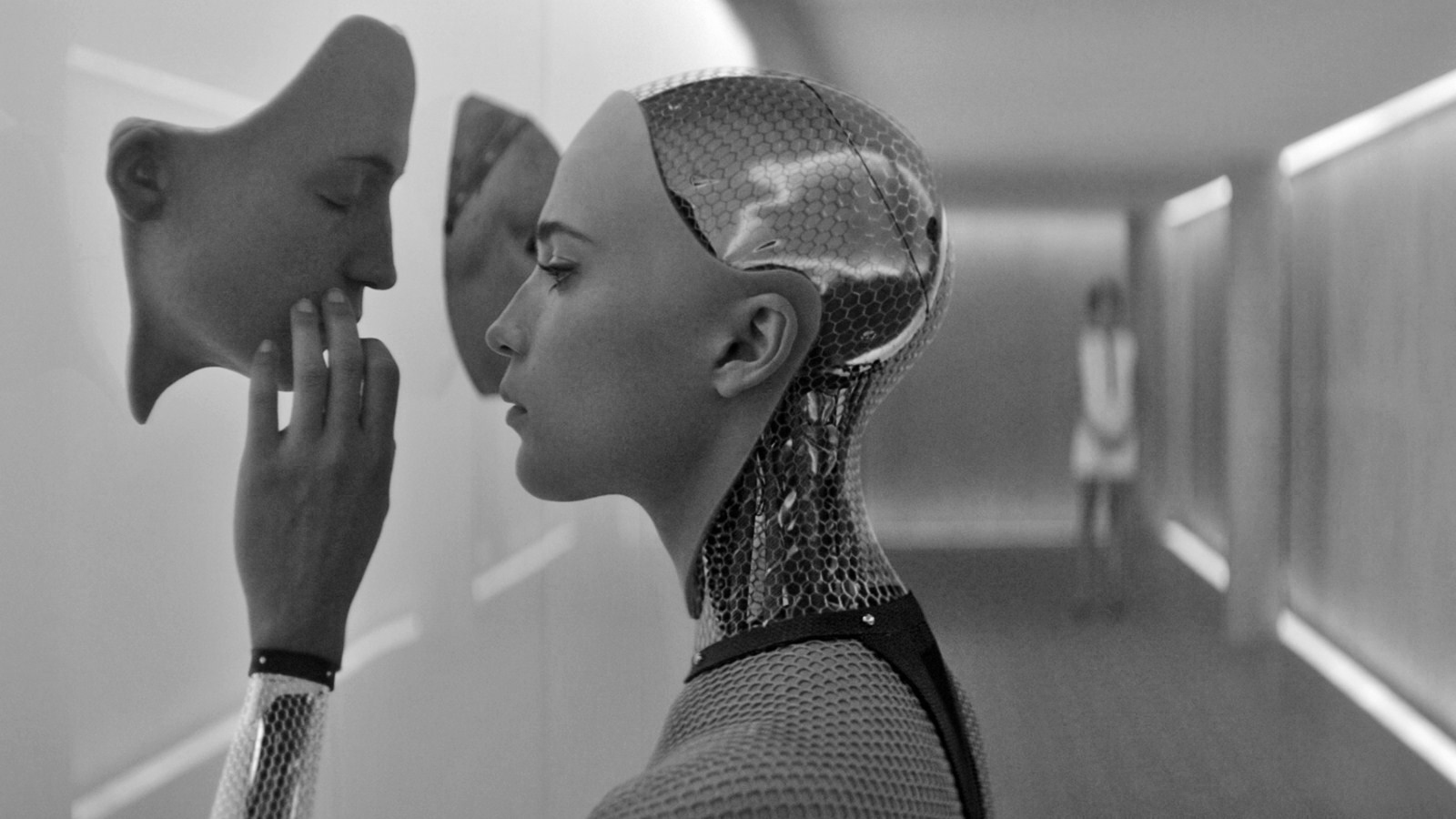

→ Garland, Alex. 2015. Ex Machina. United States: Universal Pictures.